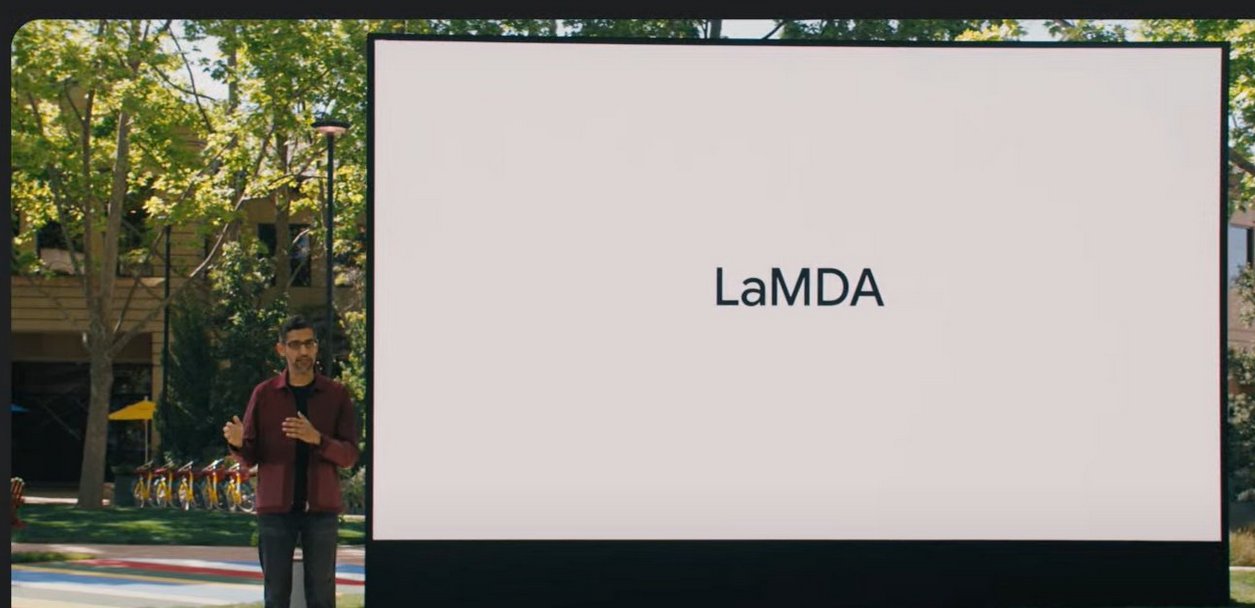

LaMDA is Google’s latest advance in natural language understanding for dialogue applications. Google CEO Sundar Pichai unveiled it at Google I/O 2021.

Google has been working on a new language model called LaMDA (“Language Model for Dialogue Applications”) that’s much better at following conversations in a natural way, rather than as a series of badly formed search queries.

Sundar Pichai said Google has used AI to improve the core Search experience for billions of people, yet there are still moments when computers just don’t understand, because language is endlessly complex.

“Today I am excited to share our latest research in natural language understanding: LaMDA. LaMDA is a language model for dialogue applications. It’s open domain, which means it is designed to converse on any topic,” said Sundar Pichai.

Converse normally

AI systems have come a long way in their ability to recognise what you’re saying and respond. But still, AI platforms become easily confused unless you speak carefully and literally. LaMDA is meant to be able to converse normally about almost anything without being retrained for each new topic. Google says this could make information and computing radically more accessible and easier to use.

Pichai said LaMDA is open domain, which means it is designed to converse on any topic. For example, LaMDA understands quite a bit about the planet Pluto.

“So if a student wanted to discover more about space, they could ask about Pluto and the model would give sensible responses, making learning even more fun and engaging. If that student then wanted to switch over to a different topic — say, how to make a good paper airplane — LaMDA could continue the conversation without any retraining,” he said.

Google has been researching and developing language models for many years. With LaMDA, the company says it has focused on meeting its standards on fairness, accuracy, safety and privacy, and on developing the model consistently with its AI Principles.

“And we look forward to incorporating conversation features into products like Google Assistant, Search and Workspace, as well as exploring how to give capabilities to developers and enterprise customers,” said Sundar Pichai.

How people communicate

Onstage at Google I/O, Sundar Pichai showed off how LaMDA could take on the persona of Pluto or a paper airplane. In each example, LaMDA was able to respond to questions or comments that don’t have a clear answer.

LaMDA is a huge step forward in natural conversation, but it’s still only trained on text. In reality, when people communicate with each other, they do it across images, text, audio and video.

“So we need to build multimodal models (MUM) to allow people to naturally ask questions across different types of information. With MUM you could one day plan a road trip by asking Google to ‘find a route with beautiful mountain views.’ This is one example of how we’re making progress towards more natural and intuitive ways of interacting with Search,” he said.

LaMDA’s conversational skills have been years in the making. Like many recent language models, including BERT and GPT-3, it’s built on Transformer, a neural network architecture that Google Research invented and open-sourced in 2017. That architecture produces a model that can be trained to read many words (a sentence or paragraph, for example), pay attention to how those words relate to one another and then predict what words it thinks will come next.
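To make the “pay attention to how those words relate to one another” step more concrete, here is a minimal sketch of scaled dot-product self-attention, the core operation of the Transformer architecture. It is a toy illustration and not LaMDA’s actual implementation: the two-dimensional word vectors and the function names are made up for this example, and it leaves out the learned query, key and value projections, the multiple attention heads and the next-word prediction layer that a real model would have.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Toy scaled dot-product self-attention.

    Assumption: each word vector serves as its own query, key and value.
    A real Transformer learns separate projections for each role.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Similarity of this word to every word, scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # New representation: attention-weighted mix of all word vectors.
        mixed = [
            sum(w * vec[i] for w, vec in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-dimensional embeddings, chosen by hand for illustration.
embeddings = {
    "pluto": [1.0, 0.0],
    "planet": [0.9, 0.1],
    "paper": [0.0, 1.0],
}
words = list(embeddings)
outputs, weights = self_attention([embeddings[w] for w in words])

for word, row in zip(words, weights):
    print(word, [round(w, 2) for w in row])
```

Each printed row shows how much one word attends to every word in the input. With these hand-picked vectors, “pluto” puts more weight on “planet” than on “paper”, because their vectors point in similar directions.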

Google has made it clear that its highest priority when creating technologies like LaMDA is to minimise the risk of misuse, since language is one of humanity’s greatest tools.