5G is accelerating deployments of edge computing, as communications service providers (CSPs) look to rearchitect their network infrastructure and grow the capacity of their networks as well as add new services. As technology evolves towards containers and cloud-native architectures and applications, there are key architectural considerations for CSPs to address.

The case for edge

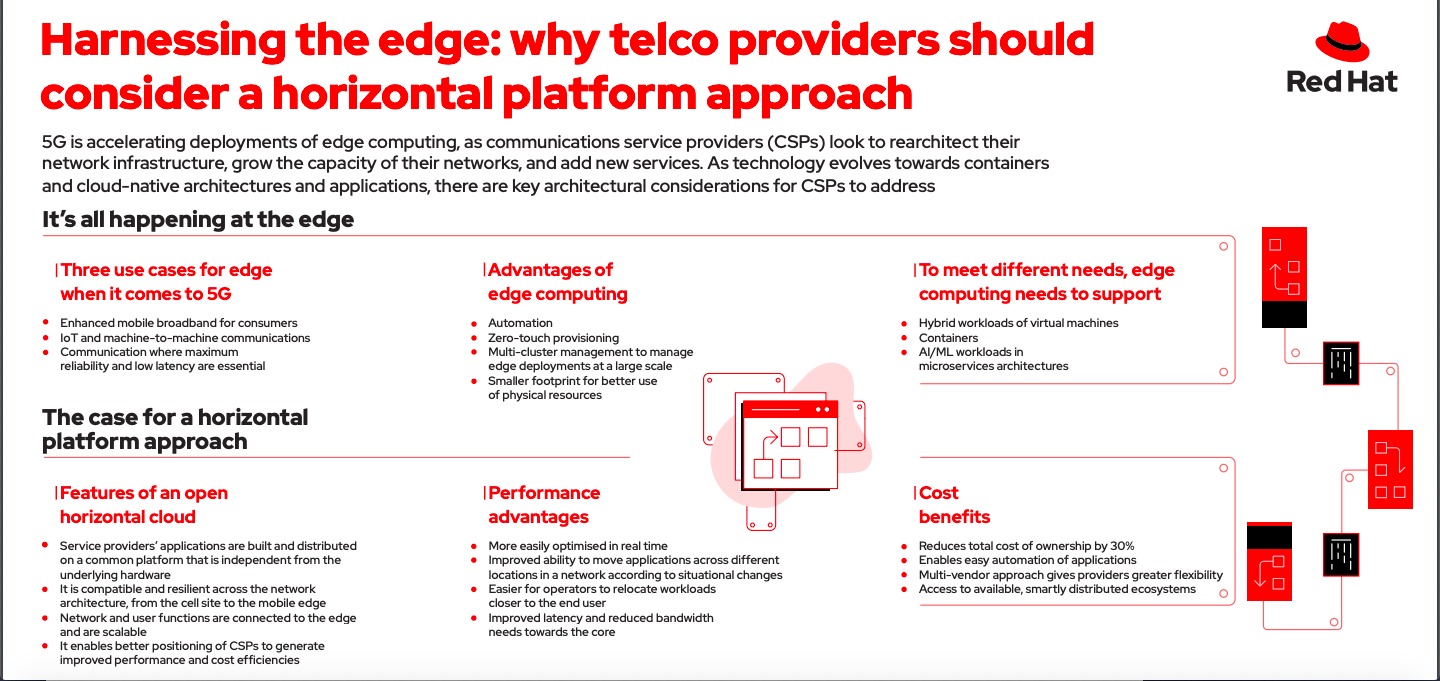

There are three key use cases when it comes to 5G: 1) enhanced mobile broadband for consumers who are eating up ever-increasing amounts of data; 2) IoT and machine-to-machine communications; and 3) communications where maximum reliability and low latency are essential, such as applications for self-driving cars and remote surgery. As service providers scale to meet the demands of more users and new bandwidth-hungry applications, the need for compute power that sits closer to the end user (at the edge) becomes paramount.

For operators, the advantages of edge computing are numerous. They include automation, zero-touch provisioning and multi-cluster management, which make it possible to manage edge deployments at scale, as well as a smaller footprint for better utilisation of physical resources. However, edge computing is not a strategy on its own; it needs to form part of a wider hybrid cloud strategy.

Open hybrid cloud enables service providers to onboard any workload on any footprint (whether public cloud, private cloud, virtualized or bare metal) to any location, from the core data centre to the edge server. To meet the needs of these different scenarios, an edge computing solution should support hybrid workloads of virtual machines (VMs), containers and AI/ML workloads in microservices architectures, including consistent management and orchestration. If the edge were to be managed separately across hundreds of sites, operations would become complex and chaotic.

Service providers need to manage their disparate edge sites in the same way they would manage the rest of their locations in the network, which means treating the edge as a natural extension of a hybrid cloud. Open hybrid cloud deployment provides much needed consistency across the technology ecosystem – from edge devices to the network to the centralised data centre, helping organisations deliver on the operational excellence they’re striving for.

Easing the way for cloud-native application development is the necessary next step: it enables service providers to deliver services to end users faster and to ensure optimum application performance. This involves careful consideration of the design when implementing a cloud platform.

The case for a horizontal platform approach

A container-based core will enable CSPs to deliver cloud-native 5G networks, with containerised networking apps and modular microservices promising the opportunity to dynamically orchestrate and grow network service capacity across distributed architectures. However, when it comes to implementing a containerised cloud platform, service providers need to decide whether to employ one type of infrastructure in core locations and a different one in distributed sites (a vertical solutions approach), or to use a horizontally integrated cloud across both core and distributed sites.

An open horizontal cloud is one in which a service provider’s applications are built and distributed on a common platform that is independent from the underlying hardware, and therefore compatible and resilient across the network architecture, right from the cell site to the mobile edge. Instead of vertical silos, where applications are specific to a vendor, network and user functions are connected to the edge and scalable through a horizontal cloud platform, better positioning CSPs to generate improved performance and cost efficiencies.

Performance

Containers are portable across a hybrid environment. With a horizontal cloud, performance can more easily be optimised in real time, because applications can be moved between locations in the network as conditions change. For example, a sporting event at a large stadium may temporarily require lower latency at that location to ensure a seamless end user experience. The premise of a horizontal cloud is that it enables operators to more easily relocate workloads closer to the end user (e.g. the edge at or next to the stadium) for improved latency and reduced bandwidth needs towards the core.
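The stadium example can be sketched as a simple placement decision. This is a minimal illustration only, not how any particular platform schedules workloads: the `Site` type, the `choose_site` function, the site names and all the numbers are hypothetical. It assumes a workload goes to the site with the most spare capacity among those that meet its latency budget.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Site:
    """A hypothetical deployment location on a common horizontal platform."""
    name: str
    latency_ms: float  # assumed round-trip latency to the users being served
    free_cpu: int      # assumed spare CPU cores at the site


def choose_site(sites: Sequence[Site], cpu_needed: int,
                max_latency_ms: float) -> Optional[Site]:
    """Return the eligible site with the most spare CPU, or None if none fits."""
    eligible = [
        s for s in sites
        if s.free_cpu >= cpu_needed and s.latency_ms <= max_latency_ms
    ]
    return max(eligible, key=lambda s: s.free_cpu, default=None)


# Illustrative values only.
SITES = [
    Site("core-dc", latency_ms=40.0, free_cpu=500),
    Site("regional-edge", latency_ms=15.0, free_cpu=64),
    Site("stadium-edge", latency_ms=3.0, free_cpu=16),
]

normal_day = choose_site(SITES, cpu_needed=8, max_latency_ms=50.0)
match_day = choose_site(SITES, cpu_needed=8, max_latency_ms=5.0)
print(normal_day.name, match_day.name)
```

With a relaxed latency budget the workload stays in the core; when the budget tightens for the event, the same workload is placed at the stadium edge. Because the platform is the same at every site, only the placement changes, not the application.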

Cost

Platforms that are horizontally integrated can also reduce the total cost of ownership (TCO) compared with silo-based, vertically integrated deployments. Horizontal platforms enable applications to be easily automated, while the vertical alternative requires more tools and processes and is more likely to lock service providers into one vendor offering. The multi-vendor approach gives providers greater flexibility and allows them to benefit from an accessible, smartly distributed ecosystem.

When it comes to operating expenses, vertically integrated deployments require the design and running of separate infrastructure silos according to how many software vendors are being used. By contrast, according to a recent ACG Research analyst paper sponsored by Red Hat (https://red.ht/2KmULvq), communications service providers taking a horizontal approach can halve their engineering, planning and management expenses, cut the cost of securing multiple silos by a third, and save on multiple software licenses. This reduces the total cost of ownership by as much as 30%.
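How the individual reductions combine into an overall figure depends on how large each cost category is to begin with. The sketch below applies only the two ratios quoted above (a half and a third) to a cost breakdown. The cost shares are invented for illustration and are not taken from the ACG paper. Licence savings are left out because no figure is given for them, and the function names are hypothetical.

```python
def horizontal_cost(engineering: float, security: float, other: float) -> float:
    """Apply the quoted reductions to a vertical-silo cost breakdown.

    engineering: engineering, planning and management expenses (halved)
    security:    cost of securing multiple silos (cut by a third)
    other:       everything else (assumed unchanged in this sketch)
    """
    return engineering / 2 + security * 2 / 3 + other


def saving_percent(before: float, after: float) -> float:
    """Percentage reduction from `before` to `after`."""
    return 100 * (before - after) / before


# Hypothetical cost shares in arbitrary units, NOT figures from the paper.
vertical = {"engineering": 40.0, "security": 30.0, "other": 30.0}

before = sum(vertical.values())
after = horizontal_cost(**vertical)
print(f"Overall saving: {saving_percent(before, after):.0f}%")
```

With these invented shares the result happens to land at 30%. A provider whose engineering and security costs make up a smaller share of the total would see a smaller overall saving.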

Common challenges

The benefits of horizontally integrated infrastructure are clear, so why are some communications service providers reluctant to move away from silo-based operations? One reason is that, as edge computing strategies became available, a number of companies tried to adopt a horizontal approach prematurely.

Cloud platforms were not fully optimised at the time to function efficiently in the space, so vertical strategies were adopted as a reliable alternative. This has resulted in some service providers feeling stuck with a vertical platform strategy. And for those unfamiliar with horizontal cloud integrations, using multiple vendors may seem a complex venture. However, it is worth taking the time to understand how to build a more open, collaborative architecture, since the end result is more flexible, scalable, efficient and adaptable to performance needs.

As telco providers make strategic and structural innovations to improve the scalability, speed and performance of their network services, many are at a crossroads as they consider their options for edge architecture. By embracing an open hybrid cloud model that’s horizontally integrated across both core and distributed sites, CSPs will be able to manage, execute and automate the applications needed to run these solutions efficiently and cost-effectively across deployment environments. This lays the foundation for future services and business innovation.