Bengaluru: An elite panel of experts assembled at the Bengaluru Tech Summit 2020 shared some interesting insights on the impact of Artificial Intelligence (AI) on healthcare systems, especially during times like the ongoing Covid-19 pandemic.

AI & Robotics Tech Park (aka ARTPARK)

Mr. Umakant Soni, Chairman of AI Foundry and Co-founder & CEO of ARTPARK, opened the session with some interesting details about the AI & Robotics Tech Park, a joint initiative of IISc and AI Foundry.

“I have almost 19 years of experience in building technology, products, strategy, AI investing, entrepreneurship and policy making. For the last 10 years, I have been in the AI space. I started a chatbot company in 2009-10 and then started the first AI-focused venture fund in India, called Pi Ventures,” he said.

He went on to add that ARTPARK is a Section 8 (non-profit) company set up by IISc and AI Foundry to bring global industry, academia, society and government together for leapfrog innovation in AI & Robotics. The $30 million initiative is seed-funded by the Department of Science and Technology (Government of Karnataka).

Mr. Soni also described four major waves of AI evolution in healthcare systems: handcrafted machine learning systems (1995–2010), deep learning with predictive AI (2010–2020), common-sense AI with contextual adaptation (2020–2040) and, finally, AGI (2040 onwards).

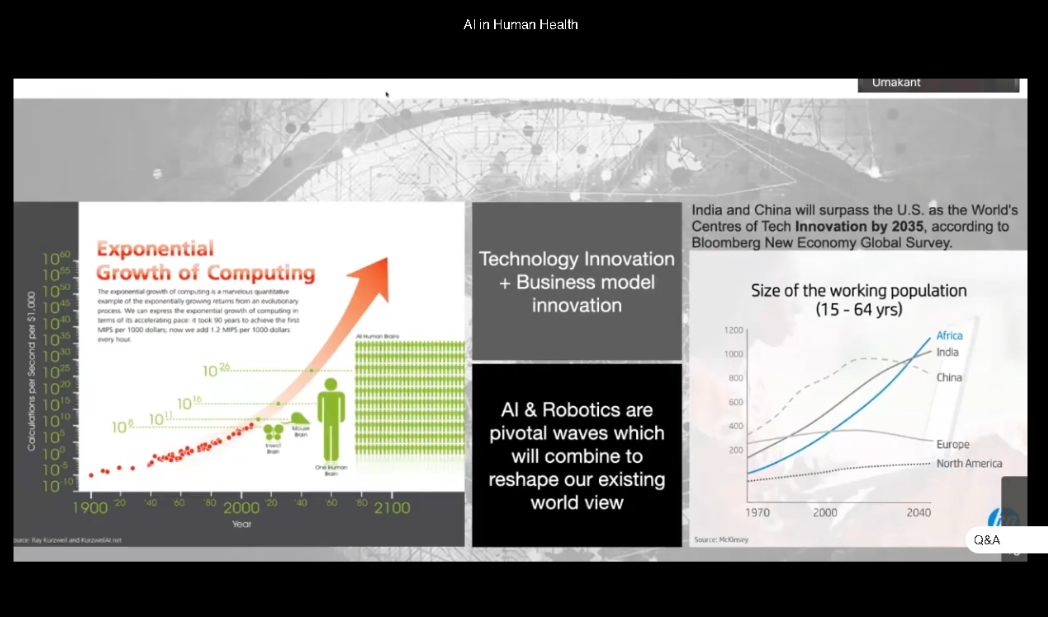

He also shed some light on how end-to-end machine learning has evolved, with better perception through pre-trained transformers, and summed up by saying that the next 15 years would be an era of hyper-innovation in AI & Robotics, driven by exponential growth in computing.

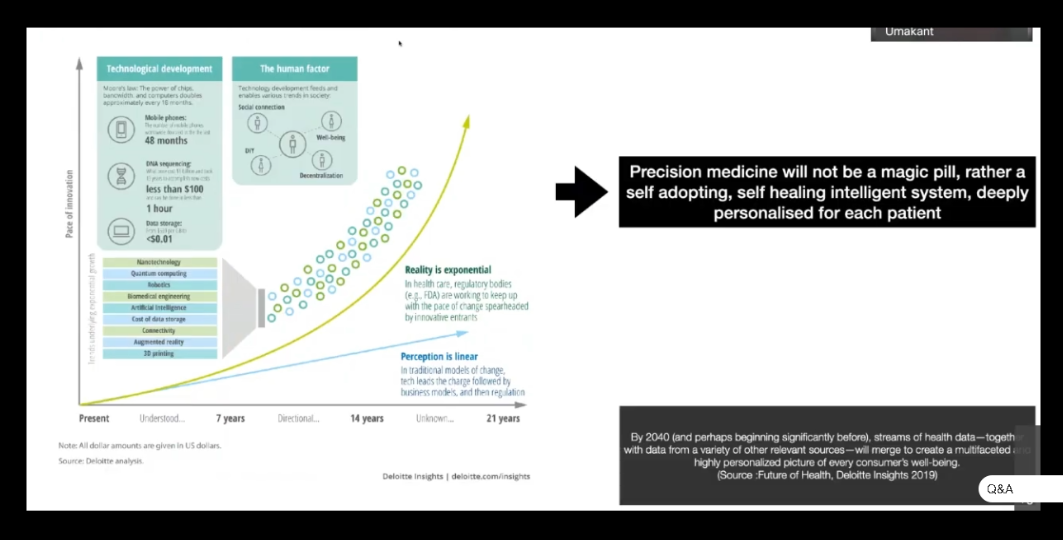

Referring to another presentation slide, Mr. Soni highlighted how precision medicine is going to transform healthcare, with a self-adapting and self-healing intelligent system personalised for each patient.

Mr. Soni also explained how AI is becoming tightly integrated with other emerging technologies such as quantum computing, biomedical engineering and nanotechnology, and how this convergence creates further challenges for exponential computing.

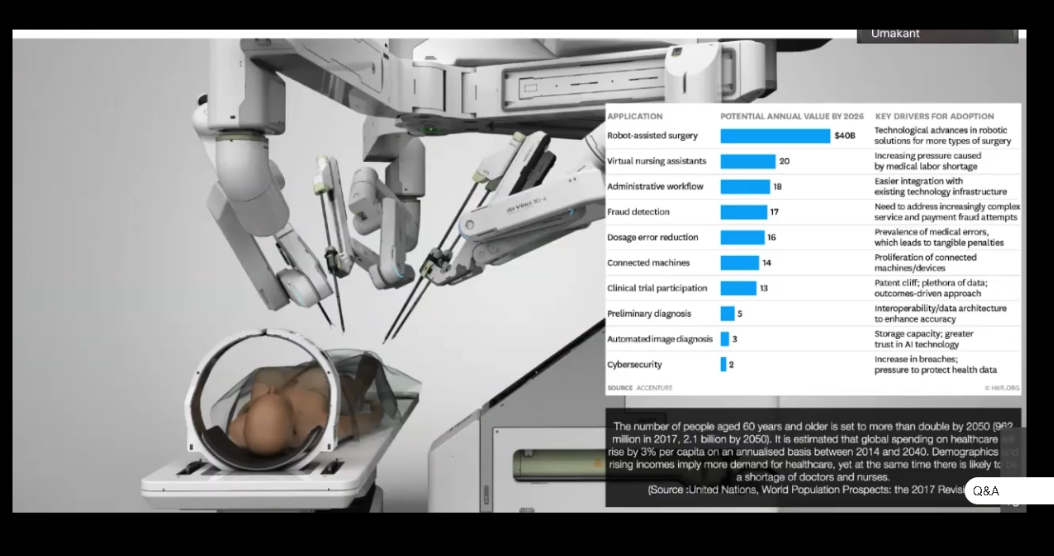

“If we imagine a scenario by 2040, there is one report which says that robots would be doing more surgeries than humans by 2040. And especially as the population is going to age drastically throughout the world and unless we bring in AI and automation in quite a few areas, we may not be able to handle the cost factor,” he said.

He concluded by saying that AI will keep advancing and that healthcare and pharma will undergo a massive change, one that the Covid-19 situation is likely to accelerate.

The other panelists were Dr Regina Barzilay (Delta Electronics Professor, MIT Computer Science & AI Lab), Kye Andersson (AI Strategist and Major Impact Initiative, AI Sweden), Dr Anurag Agrawal (Director, CSIR-Institute of Genomics and Integrative Biology) and Dr Geetha Manjunath (Founder, CEO and CTO of Niramai).

AI and Automation in Healthcare Systems

Dr Regina Barzilay began her talk by explaining what AI can and can’t do for healthcare systems, and why that question matters: “The reason why this question is pertinent today is AI is becoming stronger and stronger every day. But, at the same time we don’t see a lot of AI being implemented in the healthcare system at least in the United States and Europe.”

She surveyed the landscape of AI methods in healthcare, classifying them into three main categories: automating tasks humans can do, automating tasks humans cannot do, and deriving new insights.

“If you are looking at the whole landscape of the AI methodology that’s applicable to the healthcare, you can roughly speaking take all these heterogeneous technology and divide them into these three groups,” she said.

She pointed out that ‘Automating Tasks Humans Can Do’ is currently the biggest of the three buckets, while the second category covers tasks that only machines can perform, such as analysing huge amounts of data and identifying subtle patterns.

Speaking about the third category, Deriving New Insights, she said there are very few examples so far, as the area is only just emerging. “This is a new system which gives us insights into what is learnt by the AI system using the data available,” she added.

She summed up by saying that we are currently in a transition from the first two categories to the third, which represents the future.

Turning to the tasks AI automates today, she cited real-life examples such as extracting and analysing data from patient records for clinical trials, for instance details of breast cancer cases recorded in pathology reports.

“The pathological report highlights all the features of the disease (breast cancer) with different biological bio-markers, different measurements in size for lymph nodes and the various inherent patterns,” she said.

Using breast atypia as an example, she explained how detection of the condition differs with and without AI: the detection rate is much higher with AI than without it.

She added that AI played a vital role in distinguishing patients with breast cancer from cases of breast atypia by analysing these patterns.

Dr Regina also noted that machine learning is now widely used in hospitals to predict cancer. She walked through how a cancer detection system works and the stages of analysing breast mammograms with a standard ResNet-18 architecture.

Pointing to a report on AI software performance, she explained how performance varies between an out-of-the-box configuration, generic improvements and customised variants.

She concluded that the customised versions, the so-called smart models, performed best at evaluating pathology reports.

AI in Predictive Analytics

Dr Regina also pointed out that AI plays a crucial role in predictive healthcare, such as forecasting patient outcomes, a complex task that humans cannot perform accurately on their own.

“Pathology slides and sequencing info can be used to predict sensitivity to treatment of the disease or even identifying the type of breast cancer,” she added.

Challenges in Predicting Outcomes

Elaborating on the challenges of predicting outcomes, Dr Barzilay pointed out that balancing annotation cost, data size and annotation quality is the key to success.

Citing a research article, she explained how racial bias in an AI algorithm could lead to misleading information, incorrect treatment or inaccurate predictions of a disease’s outcome.

Dr Regina summed up by saying that, to get accurate results, it is crucial to train the AI on the same population on whom the diagnosis or tests are being done. “The patient demographics, clinical settings and the device used for diagnosis need to be constant to avoid the AI bias,” she clarified.

“AI is not about competing with humans. But, it’s about how to find symbiosis which helps to bring human and machine power together to provide better outcomes for patients,” she concludes.

Niramai

“We (at Niramai) are developing a new way of detecting breast cancer early in an affordable, accessible manner using artificial intelligence and thermography which is a new way of sensing,” Dr Geetha said, describing her work at Niramai.

Implementation of AI-based Systems and Challenges

The panelists also highlighted the challenges of implementing AI-based systems. In particular, data must be shared on a global scale, because a model trained on a small population sample lacks enough data and becomes biased.

Commenting on the poor response to data collaboration during the Covid-19 pandemic, Dr Anurag Agrawal said:

“If we were to look at total number of cases and the number of people tested, you would reach very different conclusions about how much the virus has spread when compared to a sample test for measuring antibodies. If you want to measure the antibodies in a sample test, only 10% of the people actually qualify for it.”

“If you want to do RT-PCR then you have to test everybody. So, unfortunately we do not have any real successes to report for Covid-19 and nothing has really worked as much of the collected data is wrong,” he concludes.
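The contrast Dr Anurag drew between counting confirmed cases and running an antibody sample survey can be illustrated with a toy simulation. This is a minimal sketch, not his analysis: the population size, the true prevalence, the share of infections that get tested and confirmed (`detection_rate`) and the assumption of a perfectly accurate antibody test are all hypothetical.

```python
import random


def simulate_spread_estimates(population=100_000, true_prevalence=0.08,
                              detection_rate=0.1, sample_size=2_000, seed=0):
    """Toy comparison of two ways to estimate how far a virus has spread.

    All parameters are hypothetical; the antibody test is assumed perfect.
    """
    rng = random.Random(seed)
    # True (unobserved) infection status of every person.
    infected = [rng.random() < true_prevalence for _ in range(population)]

    # Case counts: only infections that happen to be tested and confirmed.
    confirmed = sum(1 for is_infected in infected
                    if is_infected and rng.random() < detection_rate)

    # Serosurvey: test a simple random sample of people for antibodies.
    sample = rng.sample(infected, sample_size)
    sero_estimate = sum(sample) / sample_size

    return {
        "true_prevalence": sum(infected) / population,
        "case_based_estimate": confirmed / population,
        "serosurvey_estimate": sero_estimate,
    }


if __name__ == "__main__":
    for name, value in simulate_spread_estimates().items():
        print(f"{name}: {value:.3%}")
```

Under these assumptions, case counts capture only about a tenth of infections by construction, while a modest random sample lands close to the true prevalence, which is why the two approaches can lead to very different conclusions.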

Dr Anurag Agrawal also pointed out that traditional systems and data scientists actually made better predictions of epidemiological outcomes, especially during emergencies.

Adding to Dr Anurag’s perspective, Dr Geetha stressed the importance of combining AI with descriptive analytics in thermal imaging, since explained results are more convincing and make it easier for doctors to rely on such data.

Commenting on the future of healthcare systems, the panelists concluded that health will become a continuous process, with round-the-clock health monitoring protecting people every day of their lives.

To wrap up, Dr Anurag answered our question on how to address the difference in AI bias between models built on a small sample and those built on a large sample of the population:

“AI bias is a very well-known problem. I will take the simplest possible example – If you were to use American X-rays to diagnose Covid because you don’t have any other recorded datasets. When you start applying them to Indian data, you will start seeing problems. Because they have never seen Indian X-rays before which have what are called Dirty Lungs caused due to high-pollution for instance.

“And the infiltrates that you see or that the diagnostics of Covid-19 as seen by the machines are normally very thin in the Indian X-rays with people having Dirty Lungs,” he adds.

“So, unless you have a very large sample encompassing a globe representing a variety of conditions, you will get bias… no matter what you do,” Dr Anurag concludes.
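Dr Anurag’s point about a model trained on one population failing on another (often called dataset or distribution shift) can be illustrated with a toy simulation. This is a minimal sketch under loudly hypothetical assumptions: each X-ray is reduced to a single made-up “opacity score”, the “model” is a single threshold, and pollution-related haze is modelled as a constant offset (`background_opacity`) added to every score. That is only one way such a shift might appear, and none of these names or numbers come from the panel.

```python
import random


def make_xray_scores(n, background_opacity, rng):
    """Hypothetical 1-D 'opacity score' per chest X-ray.

    label True means Covid-like infiltrates are present. background_opacity
    models lung haze unrelated to Covid (e.g. pollution) that shifts all scores.
    """
    data = []
    for _ in range(n):
        label = rng.random() < 0.5
        score = background_opacity + (1.0 if label else 0.0) + rng.gauss(0.0, 0.3)
        data.append((score, label))
    return data


def accuracy(data, threshold):
    """Fraction of X-rays classified correctly by the rule 'score > threshold'."""
    return sum((score > threshold) == label for score, label in data) / len(data)


def fit_threshold(data):
    """Pick the observed score that maximises accuracy on the training data."""
    return max((score for score, _ in data), key=lambda t: accuracy(data, t))


if __name__ == "__main__":
    rng = random.Random(42)
    source = make_xray_scores(500, background_opacity=0.0, rng=rng)  # training population
    target = make_xray_scores(500, background_opacity=0.8, rng=rng)  # hazier population

    t_source = fit_threshold(source)
    print("trained on source, tested on source:", accuracy(source, t_source))
    print("trained on source, tested on target:", accuracy(target, t_source))
    print("trained on target, tested on target:", accuracy(target, fit_threshold(target)))
```

In this toy setup, a threshold tuned on the source population flags many healthy but hazy target X-rays as positive, while re-fitting on target data restores accuracy. That mirrors the panel’s call for larger, more representative datasets.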